A year has passed since the CrowdStrike outage caused widespread disruption to enterprise IT systems and infrastructure. From grounded planes to hospital system outages, the July 2024 incident reminded the world just how fragile our digital ecosystems can be.

In this post, we look back on the incident, unpack the key lessons, and explore what steps companies should be taking to avoid becoming collateral damage in the next tech meltdown.

Understanding the Incident: Not a Breach, But a Breakdown

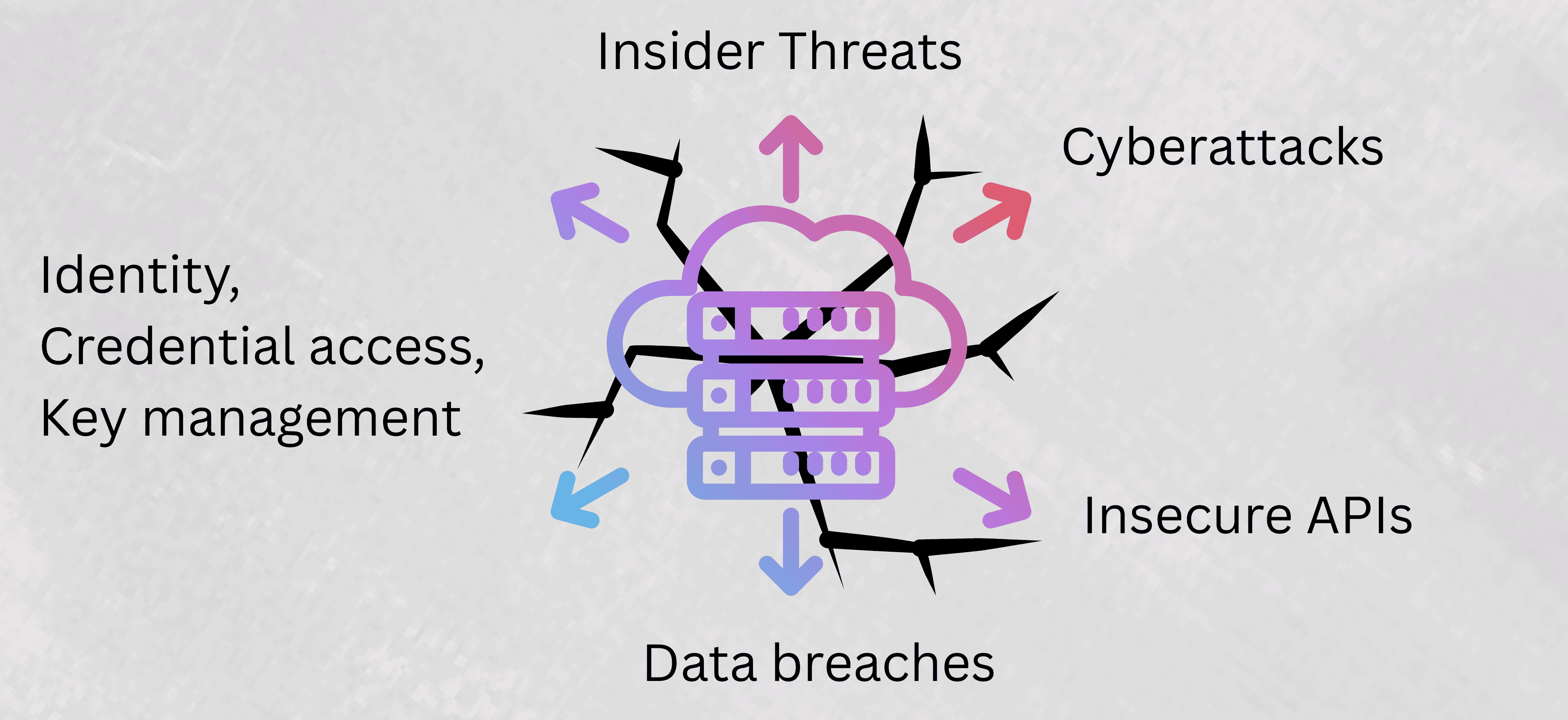

The CrowdStrike incident wasn't a cyberattack but a software deployment mishap: a faulty content update to CrowdStrike's Falcon sensor caused the Windows machines running it to crash, and the failure cascaded through global networks. Within hours, businesses worldwide were affected, with mass outages hitting critical services. Organizations running workloads on platforms like AWS, Azure, or Google Cloud experienced varying levels of disruption, depending on their endpoint protection configurations and redundancy setups.

While the company responded swiftly, the incident exposed gaps in update deployment and quality assurance, particularly in high-stakes cloud operations.

Lessons Learned:

- Third-Party Dependencies: Cloud ecosystems often rely on vendors like CrowdStrike for security integrations. It's time to diversify critical toolsets where possible, and ensure business continuity planning includes vendor failure scenarios.

- Update Pipelines Must Be Bulletproof: Organizations are increasingly adopting canary deployments, which release changes to a limited set of systems first, and are using sandbox environments for isolated testing before full-scale rollout.

- Expand Incident Response: Cloud architectures must prioritise multi-region failover, automated backups, and independent disaster recovery plans. Incorporating AI-driven monitoring and anomaly detection can also enable faster identification and mitigation of cascading issues.

- Human Error and Organizational Culture: The incident highlights how human factors like insufficient training, oversight, or rushed deployment processes can lead to major security failures. Preventing these “silent killers,” such as misconfigurations, requires cultivating a culture of diligence, continuous learning, regular audits, and shared accountability across teams.
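To make the canary idea concrete, here is a minimal Python sketch of a ring-based rollout that halts as soon as one ring looks unhealthy. The ring names, fleet fractions, the `staged_rollout` function and the crash-rate threshold are all hypothetical; a real pipeline would plug its own deployment tooling and telemetry into the `deploy` and `crash_rate` callbacks.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical rollout rings, smallest first: (name, fraction of the fleet).
RINGS: List[Tuple[str, float]] = [
    ("canary", 0.01),
    ("early", 0.10),
    ("broad", 1.00),
]


@dataclass
class RolloutResult:
    completed_rings: List[str]
    halted_at: Optional[str]


def staged_rollout(
    deploy: Callable[[str, float], None],
    crash_rate: Callable[[str], float],
    max_crash_rate: float = 0.001,
) -> RolloutResult:
    """Deploy ring by ring, halting as soon as a ring exceeds the crash threshold."""
    completed: List[str] = []
    for ring, fraction in RINGS:
        deploy(ring, fraction)
        observed = crash_rate(ring)  # e.g. measured after a soak period
        if observed > max_crash_rate:
            # Stop here: the larger rings after this one never get the update.
            return RolloutResult(completed, halted_at=ring)
        completed.append(ring)
    return RolloutResult(completed, halted_at=None)


if __name__ == "__main__":
    deployed: List[str] = []
    # Simulate a faulty update that crashes half of the hosts it reaches.
    result = staged_rollout(
        deploy=lambda ring, fraction: deployed.append(ring),
        crash_rate=lambda ring: 0.5,
    )
    print(result)    # RolloutResult(completed_rings=[], halted_at='canary')
    print(deployed)  # ['canary']
```

The design choice that matters is the gate between rings: each ring must pass its health check before the next, larger ring is touched, so a bad update is contained to a small slice of the fleet instead of reaching every host at once.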

Strategic Precautions for Cloud Management

Architect for Failure, Not Just Availability

Most organizations design for uptime. Far fewer design for controlled failure, the ability to isolate, contain, and recover from a breakdown before it escalates into an outage. Cloud architectures must assume that failure will happen, and plan for fault isolation, auto-remediation, and graceful degradation.
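One common building block for fault isolation and graceful degradation is the circuit breaker pattern. The Python sketch below is a minimal, illustrative version, not a production library: the `CircuitBreaker` class, its thresholds and the injectable `clock` are assumptions chosen for clarity. After a run of consecutive failures it stops calling the struggling dependency for a cool-down period and serves a fallback instead.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """After `max_failures` consecutive errors, skip the dependency for
    `reset_after` seconds and return the fallback result instead."""

    def __init__(
        self,
        max_failures: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: fail fast and degrade gracefully
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback()
        self.failures = 0  # success closes the circuit again
        return result
```

Callers keep working with a degraded answer (for example, cached data) while the failing dependency is left alone to recover, which keeps one broken component from dragging down everything that calls it.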

The following precautions can help keep a localized failure from escalating into an outage:

- Multi-region and multi-zone redundancy with intelligent routing.

- Service mesh architectures for better observability and traffic control in microservices.

- Chaos engineering to simulate failure scenarios and test response plans under real-world conditions.
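The failover and chaos-engineering items can be illustrated with a toy Python simulation. The region names, `pick_region` and `chaos_experiment` are hypothetical, and real chaos engineering injects faults into running systems rather than into a model like this one. The sketch routes traffic to the first healthy region in priority order, then repeatedly knocks out a random region to check that traffic still finds a home.

```python
import random
from typing import Dict, List, Optional


def pick_region(priority: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Route to the first healthy region in priority order (simple failover)."""
    for region in priority:
        if healthy.get(region, False):
            return region
    return None  # total outage: no healthy region left


def chaos_experiment(priority: List[str], trials: int = 1000, seed: int = 0) -> float:
    """Knock out one random region per trial and return the fraction of
    trials in which traffic could still be served."""
    rng = random.Random(seed)
    served = 0
    for _ in range(trials):
        healthy = {region: True for region in priority}
        healthy[rng.choice(priority)] = False  # inject a single-region failure
        if pick_region(priority, healthy) is not None:
            served += 1
    return served / trials


if __name__ == "__main__":
    print(chaos_experiment(["eu-west", "us-east"]))  # 1.0
    print(chaos_experiment(["eu-west"]))             # 0.0
```

Even this toy experiment makes the point of redundancy measurable: a single-region deployment fails every trial, while two regions survive any single-region loss.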

Dependency Mapping and Blast Radius Minimisation

- Conduct a dependency inventory: Understand what services depend on what vendors, and how tightly those dependencies are coupled.

- Implement segmentation and service isolation: Avoid organization-wide rollouts of critical tools without staging and isolation.

- Use software bill of materials (SBOMs) for your supply chain: Know what's inside every component of your application stack.
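Once a dependency inventory exists, the blast radius of a vendor failure can be computed directly from it. The Python sketch below assumes a hypothetical inventory (`DEPENDS_ON`, with made-up service names) mapping each service to what it directly depends on, inverts that map, and walks it breadth-first to find every service that would be hit directly or transitively.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical inventory: each service maps to what it directly depends on.
DEPENDS_ON: Dict[str, List[str]] = {
    "checkout-api": ["payments", "endpoint-agent"],
    "payments": ["endpoint-agent", "db"],
    "reporting": ["db"],
    "db": [],
    "endpoint-agent": [],
}


def blast_radius(failed: str, depends_on: Dict[str, List[str]]) -> Set[str]:
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the graph: for each dependency, which services rely on it?
    dependents: Dict[str, List[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)
    # Breadth-first walk outward from the failed component.
    affected: Set[str] = set()
    queue = deque([failed])
    while queue:
        for parent in dependents.get(queue.popleft(), []):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


if __name__ == "__main__":
    print(sorted(blast_radius("endpoint-agent", DEPENDS_ON)))  # ['checkout-api', 'payments']
```

Tightly coupled dependencies show up as large result sets; those are the places where segmentation and isolation pay off most.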

Strengthen Change Management

- Formalize staged rollouts with progressive delivery tools.

- Require pre-deployment signoffs for changes affecting shared infrastructure or security systems.

- Use infrastructure as code (IaC) and policy-as-code to enforce guardrails in CI/CD pipelines.
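The sign-off and staged-rollout rules above can be expressed as code that a pipeline evaluates before deploying. Dedicated policy-as-code tools such as Open Policy Agent use their own policy languages; the plain-Python sketch below only illustrates the idea, and the `ChangeRequest` record, the tag names and the two-approver threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical tags marking shared or security-critical infrastructure.
PROTECTED_TAGS: Set[str] = {"shared-infra", "security"}


@dataclass
class ChangeRequest:
    """Hypothetical change record that a CI/CD pipeline might evaluate."""
    name: str
    tags: Set[str]
    approvals: List[str] = field(default_factory=list)
    rollout_stages: int = 1


def policy_violations(change: ChangeRequest, min_approvals: int = 2) -> List[str]:
    """Return the reasons to block a change (an empty list means it may proceed)."""
    violations: List[str] = []
    if change.tags & PROTECTED_TAGS:
        # Count distinct approvers so one person cannot approve twice.
        if len(set(change.approvals)) < min_approvals:
            violations.append("needs pre-deployment sign-off")
        if change.rollout_stages < 2:
            violations.append("must use a staged rollout")
    return violations
```

A pipeline step would fail the build whenever the returned list is non-empty, so the guardrail is enforced automatically rather than relying on someone remembering the process.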

As enterprises scale in the cloud, every new tool, integration, or automation introduces new potential points of failure. The solution isn’t to slow down, but to build smarter, with resilience and accountability at every layer.

Made with Superblog
